This is an information page. It is not one of Wikipedia's policies or guidelines; rather, its purpose is to explain certain aspects of Wikipedia's norms, customs, technicalities, or practices. It may reflect differing levels of consensus and vetting.

Robots or bots are automatic processes that interact with Wikipedia (and other Wikimedia projects) as though they were human editors. This page attempts to explain how to develop a bot for use on Wikimedia projects, and much of it is transferable to other wikis based on MediaWiki. The explanation is geared mainly towards those who have some prior programming experience but are unsure of how to apply this knowledge to creating a Wikipedia bot.

Why would I need to create a bot?

Bots can automate tasks and perform them much faster than humans. If you have a simple task that you need to perform lots of times (an example might be to add a template to all pages in a category with 1000 pages), then this is a task better suited to a bot than a human.

Considerations before creating a bot

Reuse existing bots

It is often far simpler to request a bot job from an existing bot. If you have only periodic requests or are uncomfortable with programming, this is usually the best solution. These requests can be made at Wikipedia:Bot requests. In addition, there are a number of tools available to anyone. Most of these take the form of enhanced web browsers with MediaWiki-specific functionality. The most popular of these is AutoWikiBrowser (AWB), a browser specifically designed to assist with editing on Wikipedia and other Wikimedia projects. A mostly complete list of tools can be found at Wikipedia:Tools/Editing tools. Tools, such as AWB, can often be operated with little or no understanding of programming.

Tip

Use Toolhub to explore available tools.

Reuse codebase

If you decide you need a bot of your own due to the frequency or novelty of your requirements, you don't need to write one from scratch. There are already a number of bots running on Wikipedia, and many of them publish their source code, which can sometimes be reused with little additional development time. There are also a number of standard bot frameworks available. Modifying an existing bot or using a framework greatly reduces development time. Also, because these code bases are in common use and are maintained community projects, it is far easier to get bots based on these frameworks approved for use. The most popular and common of these frameworks is Pywikibot (PWB), a bot framework written in Python. It is thoroughly documented and tested, and many standardized Pywikibot scripts (bot instructions) are already available. Other examples of bot frameworks can be found below. For some of these frameworks, such as PWB, a general familiarity with scripts is all that is necessary to run the bot successfully (it is important to update these frameworks regularly).

Important questions

Writing a new bot requires significant programming ability. A completely new bot must undergo substantial testing before it will be approved for regular operation. To write a successful bot, planning is crucial. The following considerations are important:

- Will the bot be manually assisted or fully automated?

- Will you create the bot alone, or with the help of other programmers?

- Will the bot's requests, edits, or other actions be logged? If so, will the logs be stored on local media, or on wiki pages?

- Will the bot run inside a web browser (for example, written in JavaScript), or will it be a standalone program?

- If the bot is a standalone program, will it run on your local computer, or on a remote server such as the Toolforge?

- If the bot runs on a remote server, will other editors be able to operate the bot or start it running?

How does a Wikipedia bot work?

Overview of operation

Just like a human editor, a Wikipedia bot reads Wikipedia pages, and makes changes where it thinks changes need to be made. The difference is that, although bots are faster and less prone to fatigue than humans, they are nowhere near as bright as we are. Bots are good at repetitive tasks that have easily defined patterns, where few decisions have to be made.

In the most typical case, a bot logs in to its own account and requests pages from Wikipedia in much the same way as a browser does – although it does not display the page on screen, but works on it in memory – and then programmatically examines the page code to see if any changes need to be made. It then makes and submits whatever edits it was designed to do, again in much the same way a browser would.

Because bots access pages the same way people do, bots can experience the same kind of difficulties that human users do. They can get caught in edit conflicts, have page timeouts, or run across other unexpected complications while requesting pages or making edits. Because the volume of work done by a bot is larger than that done by a live person, the bot is more likely to encounter these issues. Thus, it is important to consider these situations when writing a bot.

APIs for bots

In order to make changes to Wikipedia pages, a bot necessarily has to retrieve pages from Wikipedia and send edits back. There are several application programming interfaces (APIs) available for that purpose.

- MediaWiki API (api.php). This library was specifically written to permit automated processes such as bots to make queries and post changes. Data is returned in JSON format (see output formats for more details).

- Status: Built-in feature of MediaWiki, available on all Wikimedia servers. Other non-Wikimedia wikis may disable or restrict write access.

- There is also an API sandbox for those wanting to test api.php's features.

- Special:Export can be used to obtain bulk export of page content in XML form. See Manual:Parameters to Special:Export for arguments.

- Status: Built-in feature of MediaWiki, available on all Wikimedia servers.

- Raw (wikitext) page processing: sending an action=raw or an action=raw&templates=expand GET request to index.php will give the unprocessed wikitext source code of a page. For example: https://en.wikipedia.org/w/index.php?title=Help:Creating_a_bot&action=raw. An API query with action=query&prop=revisions&rvprop=content or action=query&prop=revisions&rvprop=content&rvexpandtemplates=1 is roughly equivalent, and allows for retrieving additional information.

- Status: Built-in feature of MediaWiki, available on all Wikimedia servers.
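The two read paths above differ only in the URL that is requested. A minimal sketch, assuming the English Wikipedia endpoints and using only the Python standard library, that builds both URLs (the function names are illustrative, not part of any library):

```python
from urllib.parse import urlencode

# Assumption: English Wikipedia; other wikis use their own index.php/api.php paths.
INDEX_PHP = "https://en.wikipedia.org/w/index.php"
API_PHP = "https://en.wikipedia.org/w/api.php"


def raw_url(title: str) -> str:
    """index.php URL that returns the unprocessed wikitext of a page."""
    return INDEX_PHP + "?" + urlencode({"title": title, "action": "raw"})


def api_content_url(title: str) -> str:
    """Roughly equivalent API query, which also returns the revision timestamp."""
    params = {
        "action": "query",
        "prop": "revisions",
        "rvprop": "content|timestamp",
        "rvslots": "main",  # recent MediaWiki versions warn if this is omitted
        "titles": title,
        "format": "json",
    }
    return API_PHP + "?" + urlencode(params)


if __name__ == "__main__":
    print(raw_url("Help:Creating_a_bot"))
    print(api_content_url("Help:Creating_a_bot"))
```

The API form is usually preferable for bots, because the same request can also return metadata such as the timestamp needed later to detect edit conflicts.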

Some Wikipedia web servers are configured to grant requests for compressed (GZIP) content. This can be done by including a line "Accept-Encoding: gzip" in the HTTP request header; if the HTTP reply header contains "Content-Encoding: gzip", the document is in GZIP form, otherwise, it is in the regular uncompressed form. Note that this is specific to the web server and not to the MediaWiki software. Other sites employing MediaWiki may not have this feature. If you are using an existing bot framework, it should handle low-level operations like this.
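If you are not using a framework, the header check described above is easy to get wrong, because Python's urllib does not decompress replies automatically. A minimal sketch, assuming a plain urllib client; `fetch` and `decode_body` are illustrative names:

```python
import gzip
import urllib.request
from typing import Optional


def decode_body(body: bytes, content_encoding: Optional[str]) -> bytes:
    """Decompress the body only if the reply header says it is GZIP."""
    if content_encoding and content_encoding.lower() == "gzip":
        return gzip.decompress(body)
    return body


def fetch(url: str, user_agent: str) -> bytes:
    # Ask for compression; the server is free to ignore the request.
    request = urllib.request.Request(
        url, headers={"Accept-Encoding": "gzip", "User-Agent": user_agent}
    )
    with urllib.request.urlopen(request) as response:
        return decode_body(response.read(), response.headers.get("Content-Encoding"))
```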

Logging in

Approved bots need to be logged in to make edits. Although a bot can make read requests without logging in, bots that have completed testing should log in for all activities. Bots logged in from an account with the bot flag can obtain more results per query from the MediaWiki API (api.php). Most bot frameworks should handle login and cookies automatically, but if you are not using an existing framework, you will need to follow these steps.

For security, login data must be passed using the HTTP POST method. Because the parameters of HTTP GET requests are easily visible in the URL, logins via GET are disabled.

To log a bot in using the MediaWiki API, two requests are needed:

Request 1 – this is a GET request to obtain a login token (returned in the logintoken field of the response)

- URL: https://en.wikipedia.org/w/api.php?action=query&meta=tokens&type=login&format=json

Request 2 – this is a POST to complete the login

- URL: https://en.wikipedia.org/w/api.php?action=login&format=json

- POST parameters: lgname=BOTUSERNAME, lgpassword=BOTPASSWORD, lgtoken=TOKEN

where TOKEN is the token from the previous result. The HTTP cookies from the previous request must also be passed with the second request.

A successful login attempt will result in the Wikimedia server setting several HTTP cookies. The bot must save these cookies and send them back every time it makes a request (this is particularly crucial for editing). On the English Wikipedia, the following cookies should be used: enwikiUserID, enwikiToken, and enwikiUserName. The enwiki_session cookie is required to actually send an edit or commit some change, otherwise the MediaWiki:Session fail preview error message will be returned.

Main-account login via "action=login" is deprecated and may stop working without warning. To continue login with "action=login", see Special:BotPasswords.
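The two-request login can be sketched with the standard library alone. This is a minimal sketch, assuming a bot password created at Special:BotPasswords (whose login name takes the form "Username@label"); the helper names are illustrative, and a real bot would add the User-Agent, maxlag and error handling discussed elsewhere on this page. The cookie jar is what carries the cookies from request 1 into request 2 and every later request.

```python
import http.cookiejar
import json
import urllib.parse
import urllib.request

API = "https://en.wikipedia.org/w/api.php"  # assumption: English Wikipedia


def make_opener(user_agent: str) -> urllib.request.OpenerDirector:
    """Opener that keeps cookies between requests, as the login flow requires."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    opener.addheaders = [("User-Agent", user_agent)]
    return opener


def api_get(opener, params: dict) -> dict:
    url = API + "?" + urllib.parse.urlencode({**params, "format": "json"})
    with opener.open(url) as resp:
        return json.load(resp)


def api_post(opener, params: dict) -> dict:
    data = urllib.parse.urlencode({**params, "format": "json"}).encode()
    with opener.open(API, data=data) as resp:  # supplying data makes it a POST
        return json.load(resp)


def login(opener, bot_username: str, bot_password: str) -> None:
    # Request 1: GET a login token.
    tokens = api_get(opener, {"action": "query", "meta": "tokens", "type": "login"})
    lgtoken = tokens["query"]["tokens"]["logintoken"]
    # Request 2: POST the credentials; the opener resends the cookies from request 1.
    result = api_post(opener, {
        "action": "login",
        "lgname": bot_username,      # e.g. "ExampleBot@task" (hypothetical)
        "lgpassword": bot_password,
        "lgtoken": lgtoken,
    })
    if result.get("login", {}).get("result") != "Success":
        raise RuntimeError(f"Login failed: {result}")
```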

Editing; edit tokens

Wikipedia uses a system of edit tokens for making edits to Wikipedia pages, as well as other operations that modify existing content such as rollback. The token looks like a long hexadecimal number followed by '+\', for example:

- d41d8cd98f00b204e9800998ecf8427e+\

The role of edit tokens is to prevent "edit hijacking", where users are tricked into making an edit by clicking a single link.

The editing process involves two HTTP requests. First, a request for an edit token must be made. Then, a second HTTP request must be made that sends the new content of the page along with the edit token just obtained. It is not possible to make an edit in a single HTTP request. An edit token remains the same for the duration of a logged-in session, so the edit token needs to be retrieved only once and can be used for all subsequent edits.

To obtain an edit token, follow these steps:

- MediaWiki API (api.php). Make a request with the following parameters (see mw:API:Edit - Create&Edit pages): action=query, meta=tokens.

The token will be returned in the csrftoken attribute of the response.

The URL will look something like this: https://en.wikipedia.org/w/api.php?action=query&meta=tokens&format=json

If the edit token the bot receives does not have the hexadecimal string (i.e., the edit token is just '+\'), then the bot is most likely not logged in. This might be due to a number of factors: a failure to authenticate with the server, a dropped connection, a timeout of some sort, or an error in storing or returning the correct cookies. If it is not because of a programming error, just log in again to refresh the login cookies. Bots should also use assertion (the API's assert parameter) to make sure that they are logged in.
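Both checks described above can be written as small guards. A minimal sketch, assuming the JSON reply of an action=query&meta=tokens request has already been decoded into a dict; the helper names are illustrative:

```python
ANON_TOKEN = "+\\"  # the bare token a logged-out session receives


def csrf_token_from_response(data: dict) -> str:
    """Extract the edit token from an action=query&meta=tokens reply."""
    token = data["query"]["tokens"]["csrftoken"]
    if token == ANON_TOKEN:
        # No hexadecimal part: the session is not logged in, so log in again.
        raise PermissionError("Received an anonymous edit token; bot is logged out")
    return token


def with_assertion(params: dict, flagged: bool = True) -> dict:
    """Add the API 'assert' parameter so the server rejects the request if the
    bot has been logged out (assert=bot additionally requires the bot flag)."""
    return {**params, "assert": "bot" if flagged else "user"}
```

Using the assertion on every write request means a silent logout produces an API error instead of an edit attributed to an IP address.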

Edit conflicts

Edit conflicts occur when multiple, overlapping edit attempts are made on the same page. Almost every bot will eventually get caught in an edit conflict of one sort or another, and should include some mechanism to test for and accommodate these issues.

Bots that use the MediaWiki API (api.php) should retrieve the edit token, along with the starttimestamp and the last revision "base" timestamp, before loading the page text in preparation for the edit; prop=info|revisions can be used to retrieve both the token and page contents in one query (example). When submitting the edit, set the starttimestamp and basetimestamp parameters, and check the server responses for indications of errors. For more details, see mw:API:Edit - Create&Edit pages.
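A minimal sketch of the edit preparation described above. As an assumption, it combines prop=revisions with meta=tokens (rather than prop=info) and asks for curtimestamp to obtain the starttimestamp; the response parsing assumes formatversion=2, and all function names are illustrative:

```python
def edit_prep_params(title: str) -> dict:
    """One query returning the text, last revision timestamp, server time and token."""
    return {
        "action": "query",
        "prop": "revisions",
        "rvprop": "content|timestamp",
        "rvslots": "main",
        "meta": "tokens",
        "curtimestamp": 1,
        "titles": title,
        "format": "json",
        "formatversion": 2,
    }


def parse_edit_prep(data: dict) -> dict:
    page = data["query"]["pages"][0]
    if "revisions" not in page:
        raise LookupError(f"{page.get('title')} does not exist")
    rev = page["revisions"][0]
    return {
        "text": rev["slots"]["main"]["content"],
        "basetimestamp": rev["timestamp"],       # when the current revision was saved
        "starttimestamp": data["curtimestamp"],  # when this bot started editing
        "token": data["query"]["tokens"]["csrftoken"],
    }


def edit_params(title: str, new_text: str, summary: str, prep: dict) -> dict:
    return {
        "action": "edit",
        "title": title,
        "text": new_text,
        "summary": summary,
        "basetimestamp": prep["basetimestamp"],
        "starttimestamp": prep["starttimestamp"],
        "format": "json",
        "token": prep["token"],  # sent last, as the API documentation recommends
    }


def is_edit_conflict(response: dict) -> bool:
    return response.get("error", {}).get("code") == "editconflict"
```

The edit parameters would be sent as a POST; if the page changed after basetimestamp, the server answers with the editconflict error code instead of overwriting the other edit.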

Generally speaking, if an edit fails to complete the bot should check the page again before trying to make a new edit, to make sure the edit is still appropriate. Further, if a bot rechecks a page to resubmit a change, it should be careful to avoid any behavior that could lead to an infinite loop and any behavior that could even resemble edit warring.
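One way to follow this advice is to re-read the page after every conflict, re-apply the change to the fresh text, stop if the change is no longer needed, and give up after a fixed number of attempts. A minimal sketch; `load_page`, `transform` and `save` are hypothetical hooks the caller supplies, and the limit of three attempts is an arbitrary assumption:

```python
from typing import Callable, Optional

MAX_ATTEMPTS = 3  # hypothetical limit that keeps the bot out of an infinite loop


def edit_with_recheck(
    load_page: Callable[[], dict],
    transform: Callable[[str], str],
    save: Callable[[str, dict], dict],
    max_attempts: int = MAX_ATTEMPTS,
) -> Optional[dict]:
    """Resubmit after an edit conflict only if the change still applies."""
    for _ in range(max_attempts):
        prep = load_page()                # fresh text, timestamps and token
        new_text = transform(prep["text"])
        if new_text == prep["text"]:
            return None                   # someone already made the change: stop
        response = save(new_text, prep)
        if response.get("error", {}).get("code") != "editconflict":
            return response               # saved, or a different error to report
    raise RuntimeError("Gave up after repeated edit conflicts")
```

Returning instead of retrying when the transformation is a no-op is what keeps the bot from repeatedly re-applying a change another editor has deliberately reverted.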

Overview of the process of developing a bot

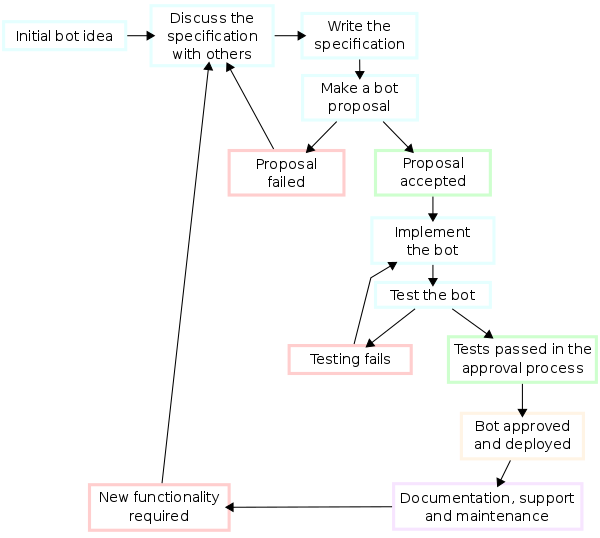

Coding the bot is only one part of developing it. You should generally follow the development cycle below to ensure that your bot follows Wikipedia's bot policy. Failure to comply with the policy may result in your bot not being approved or being blocked from editing Wikipedia.

Idea

- The first task in creating a Wikipedia bot is extracting the requirements or coming up with an idea. If you don't have an idea of what to write a bot for, you could pick up ideas at requests for work to be done by a bot.

- Make sure an existing bot isn't already doing what you think your bot should do. To see what tasks are already being performed by a bot, see the list of currently operating bots.

Specification

- Specification is the task of precisely describing the software to be written, possibly in a rigorous way. You should come up with a detailed proposal of what you want it to do. Try to discuss this proposal with some editors and refine it based on feedback. Even a great idea can be made better by incorporating ideas from other editors.

- In the most basic form, your specified bot must meet the following criteria:

- The bot is harmless (it must not make edits that could be considered disruptive to the smooth running of the encyclopedia)

- The bot is useful (it provides a useful service more effectively than a human editor could)

- The bot does not waste server resources.

Software architecture

- Think about how you might create a bot, and which programming language(s) and tools you would use. Architecture is concerned with making sure the software system will meet the requirements of the product as well as ensuring that future requirements can be addressed. Certain programming languages are better suited to some tasks than others; for more details, see § Programming languages and libraries.

Implementation

Implementation (or coding) involves turning design and planning into code. It may be the most obvious part of the software engineering job, but it is not necessarily the largest portion. In the implementation stage you should:

- Create an account for your bot. Create it while logged in to your own account (via Special:CreateAccount), which links it to yours. (If you do not create the bot account while logged in, it is likely to be blocked as a possible sockpuppet or unauthorised bot until you verify ownership.)

- Create a user page for your bot. Your bot's edits must not be made under your own account. Your bot will need its own account with its own username and password.

- Describe the bot's functions on its user page. It would be a good idea to add a link to the approval page (whether approved or not) for each function.

Testing

A good way of testing your bot as you are developing it is to have it show the changes (if any) it would have made to a page, rather than actually editing the live wiki. Some bot frameworks (such as Pywikibot) have pre-coded methods for showing diffs. During the approvals process, the bot will most likely be given a trial period (usually with a restriction on the number of edits or days it is to run for) during which it may actually edit, to enable fine-tuning and iron out any bugs. At the end of the trial period, if everything went according to plan, the bot should get approval for full-scale operation.
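If your framework has no built-in diff display, Python's standard difflib module is enough for a dry run. A minimal sketch; the `DRY_RUN` switch and `preview_diff` name are hypothetical:

```python
import difflib


def preview_diff(title: str, old_text: str, new_text: str) -> str:
    """Return a unified diff of the proposed change instead of saving it."""
    diff = difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile=f"{title} (current)",
        tofile=f"{title} (proposed)",
    )
    return "".join(diff)


DRY_RUN = True  # hypothetical switch: set to False only once the diffs look right

if DRY_RUN:
    print(preview_diff("Example", "Hello world\n", "Hello, world\n"))
```

An empty string means the bot would make no change to that page, which is itself worth checking: a bot that proposes edits to every page it visits is often matching too broadly.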

Documentation

An important (and often overlooked) task is documenting the internal design of your bot for the purpose of future maintenance and enhancement. This is especially important if you are going to allow clones of your bot. Ideally, you should post the source code of your bot on its user page or in a revision control system (see § Open-source bots) if you want others to be able to run clones of it. This code should be well documented (usually using comments) for ease of use.

Queries/Complaints

You should be ready to respond to queries about or objections to your bot on your user talk page, especially if it is operating in a potentially sensitive area, such as fair-use image cleanup.

Maintenance

Maintaining and enhancing your bot to cope with newly discovered bugs or new requirements can take far more time than the initial development of the software. To ease maintenance, document your code from the beginning.

Major changes to the functionality of an approved bot must also be approved.

General guidelines for running a bot

In addition to the official bot policy, which covers the main points to consider when developing your bot, there are a number of more general advisory points worth keeping in mind.

Bot best practices

- Set a custom User-Agent header for your bot, per the Wikimedia User-Agent policy. If you don't, your bot may encounter errors and may end up blocked by the technical staff at the server level.

- Use the maxlag parameter with a maximum lag of 5 seconds. This will enable the bot to run quickly when server load is low, and throttle the bot when server load is high.

- If writing a bot in a framework that does not support maxlag, limit the total requests (read and write requests together) to no more than 10/minute.

- Use the API whenever possible, and set the query limits to the largest values that the server permits, to minimize the total number of requests that must be made.

- Edit (write) requests are more expensive in server time than read requests. Be edit-light and design your code to keep edits to a minimum.

- Try to consolidate edits. One single large edit is better than 10 smaller ones.

- Enable HTTP persistent connections and compression in your HTTP client library, if possible.

- Do not make multi-threaded requests. Wait for one server request to complete before beginning another.

- Back off upon receiving errors from the server. Errors such as timeouts are often an indication of heavy server load. Use a sequence of increasingly longer delays between repeated requests.

- Make use of assertion to ensure your bot is logged in.

- Test your code thoroughly before making large automated runs. Individually examine all edits on trial runs to verify they are perfect.
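Several of the practices above (a descriptive User-Agent, maxlag=5, one request at a time, a request budget of roughly 10 per minute, increasingly long back-off delays and maximal query limits) can live in one small request layer. A minimal sketch under stated assumptions: the bot name and contact details are hypothetical, `send` is a caller-supplied function that performs one HTTP request with `USER_AGENT` set and returns decoded JSON, and the retry counts and delays are arbitrary choices:

```python
import time
from typing import Callable, Iterator, Optional

# Hypothetical name and contact details, in the format the User-Agent policy
# recommends: <client name>/<version> (<contact information>) <library>/<version>
USER_AGENT = ("ExampleBot/0.1 (https://en.wikipedia.org/wiki/User:ExampleBot; "
              "examplebot@example.org) urllib")


def polite_params(params: dict) -> dict:
    """Add maxlag=5 (seconds) and JSON output to an API request."""
    return {**params, "maxlag": 5, "format": "json"}


class Throttle:
    """Enforce a minimum gap between requests; 6 s allows roughly 10 per minute."""

    def __init__(self, min_interval: float = 6.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.last: Optional[float] = None

    def wait(self) -> None:
        now = self.clock()
        if self.last is not None and now - self.last < self.min_interval:
            self.sleep(self.min_interval - (now - self.last))
        self.last = self.clock()


def backoff_delays(base: float = 5.0, factor: float = 2.0,
                   attempts: int = 5) -> Iterator[float]:
    """Increasingly long waits between retries (5, 10, 20, 40, 80 s by default)."""
    delay = base
    for _ in range(attempts):
        yield delay
        delay *= factor


def call_api(send: Callable[[dict], dict], params: dict, throttle: Throttle,
             sleep: Callable[[float], None] = time.sleep) -> dict:
    """Make one request at a time, backing off on timeouts and maxlag errors."""
    for delay in backoff_delays():
        throttle.wait()  # sequential loop: never more than one request in flight
        try:
            data = send(polite_params(params))
        except OSError:  # timeouts and connection errors
            sleep(delay)
            continue
        if data.get("error", {}).get("code") == "maxlag":
            sleep(delay)  # database replication lag too high: wait and retry
            continue
        return data
    raise RuntimeError("Server still unavailable after repeated retries")


def query_all(send: Callable[[dict], dict], params: dict,
              throttle: Throttle) -> Iterator[dict]:
    """Yield each batch of results, following the API's 'continue' blocks."""
    cont: dict = {}
    while True:
        data = call_api(send, {**params, **cont}, throttle)
        yield data
        if "continue" not in data:
            return
        cont = data["continue"]
```

For example, listing every page in a category could pass {"action": "query", "list": "categorymembers", "cmtitle": "Category:Example", "cmlimit": "max"} to query_all, letting the server return as many titles per request as it allows. A fuller client would also honour the Retry-After header that accompanies a maxlag error rather than relying only on its own delay schedule.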

Common bot features you should consider implementing

Manual assistance

If your bot is doing anything that requires judgment or evaluation of context (e.g., correcting spelling), then you should consider making your bot manually assisted, which means that a human verifies all edits before they are saved. This significantly reduces the bot's speed, but it also significantly reduces errors.
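In its simplest form, manual assistance is a confirmation prompt between computing a change and saving it. A minimal sketch; `confirm_edit` is a hypothetical helper, and the `ask` parameter defaults to Python's built-in `input` but can be replaced (for example, in tests):

```python
from typing import Callable


def confirm_edit(title: str, diff: str, ask: Callable[[str], str] = input) -> bool:
    """Show a proposed change to the operator and save only on an explicit yes."""
    print(diff)
    answer = ask(f"Save this change to {title}? [y/N] ")
    return answer.strip().lower() in ("y", "yes")
```

Defaulting to "no" on an empty or unexpected answer means an accidental keypress skips the edit rather than saving it.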

Disabling the bot

It should be easy to quickly disable your bot. If your bot goes bad, it is your responsibility to clean up after it! You could have the bot refuse to run if a message has been left on its talk page, on the assumption that the message may be a complaint against its activities; this can be checked using the API meta=userinfo query (example). Or you could have a page that will turn the bot off when changed; this can be checked by loading the page contents before each edit.
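Both shut-off mechanisms can be checked before each edit. A minimal sketch under stated assumptions: the userinfo reply comes from meta=userinfo with uiprop=hasmsg and formatversion=2 (where "messages" is a boolean), and the shut-off page name and its "true" convention are hypothetical choices, not a Wikipedia standard:

```python
USERINFO_PARAMS = {
    "action": "query", "meta": "userinfo", "uiprop": "hasmsg",
    "format": "json", "formatversion": 2,
}
# Hypothetical convention: the bot runs only while this page contains "true".
SHUTOFF_PAGE = "User:ExampleBot/Run"


def should_stop(userinfo_response: dict, shutoff_text: str) -> bool:
    """Stop if someone left a talk page message or edited the shut-off page."""
    has_message = bool(userinfo_response["query"]["userinfo"].get("messages"))
    return has_message or shutoff_text.strip().lower() != "true"
```

Treating anything other than the exact expected content as "stop" makes the switch fail safe: blanking or vandalizing the shut-off page halts the bot instead of letting it continue.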

Signature

Just like a human, if your bot makes edits to a talk page on Wikipedia, it should sign its post with four tildes (~~~~). Signatures belong only on talk namespaces, with the exception of project pages used for discussion (e.g., articles for deletion).

Bot flag

A bot's edits are visible at Special:RecentChanges unless they are marked as bot edits. Once the bot has been approved and given the bot flag, it can add the bot parameter (e.g., bot=True) to the edit API call (see mw:API:Edit#Parameters) to hide its edits in Special:RecentChanges. In Python, with either mwclient or wikitools, adding bot=True to the edit/save command marks the edit as a bot edit, e.g. PageObject.edit(text=pagetext, bot=True, summary=pagesummary).

Monitoring the bot status

If the bot is fully automated and performs regular edits, you should periodically check it runs as specified, and its behaviour has not been altered by software changes. Consider adding it to Wikipedia:Bot activity monitor to be notified if the bot stops working.

Open-source bots

Many bot operators choose to make their code open source, and occasionally it may be required before approval for particularly complex bots. Making your code open source has several advantages:

- It allows others to review your code for potential bugs. As with prose, it is often difficult for the author of code to adequately review it.

- Others can use your code to build their own bots. A user new to bot writing may be able to use your code as an example or a template for their own bots.

- It encourages good security practices, rather than security through obscurity.

- If you abandon the project, it allows other users to run your bot tasks without having to write new code.

Open-source code, while rarely required, is typically encouraged in keeping with the open and transparent nature of Wikipedia.

Before sharing code, make sure that sensitive information such as passwords is separated into a file that isn't made public.
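A common way to keep credentials out of published code is to read them from the environment (or from a configuration file excluded from the repository) at run time. A minimal sketch; the environment variable names are hypothetical:

```python
import os
from typing import Tuple


def load_credentials() -> Tuple[str, str]:
    """Read bot-password credentials from the environment, so the source can be
    published without them. The variable names here are hypothetical."""
    try:
        return os.environ["EXAMPLEBOT_USERNAME"], os.environ["EXAMPLEBOT_PASSWORD"]
    except KeyError as missing:
        raise SystemExit(f"Set the {missing.args[0]} environment variable") from None
```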

There are many options available for users wishing to make their code open. Hosting the code in a subpage of the bot's userspace can be a hassle to maintain if not automated and results in the code being multi-licensed under Wikipedia's licensing terms in addition to any other terms you may specify. A better solution is to use a revision control system such as SVN, Git, or Mercurial. Wikipedia has articles comparing the different software options and websites for code hosting, many of which have no cost.

Programming languages and libraries

See also: mw:API:Client code and mw:Alternative parsers

Bots can be written in almost any programming language. The choice of a language depends on the experience and preferences of the bot writer, and on the availability of libraries relevant to bot development. The following list includes some languages commonly used for bots:

Awk

GNU Awk is an easy language for writing bots both small and large, including bots that authenticate with OAuth.

- Framework and libraries: BotWikiAwk

- Example bots: User:GreenC's account on GitHub

Perl

If your program is located on a web server, you can start it running and interact with it while it runs via the Common Gateway Interface from your browser. If your internet service provider provides you with webspace, the chances are good that you have access to a Perl build on the web server from which you can run your Perl programs.

Libraries:

- MediaWiki::API – Basic interface to the API, allowing scripts to automate editing and extraction of data from MediaWiki driven sites.

- MediaWiki::Bot – A fairly complete MediaWiki bot framework written in Perl. Provides a higher level of abstraction than MediaWiki::API. Plugins provide administrator and steward functionality. Currently unsupported.

PHP

PHP can also be used for programming bots. MediaWiki developers are already familiar with PHP, since that is the language MediaWiki and its extensions are written in. PHP is an especially good choice if you wish to provide a webform-based interface to your bot. For example, suppose you wanted to create a bot for renaming categories. You could create an HTML form into which you will type the current and desired names of a category. When the form is submitted, your bot could read these inputs, then edit all the articles in the current category and move them to the desired category. (Obviously, any bot with a form interface would need to be secured somehow from random web surfers.)

The PHP bot functions table may provide some insight into the capabilities of the major bot frameworks.

| Key people[php 1] | Name | PHP versions confirmed working | Last release | Last master branch update | Uses API[php 2] | Exclusion compliant | Admin functions | Plugins | Repository | Notes |
|---|---|---|---|---|---|---|---|---|---|---|
| Cyberpower678, Addshore, Jarry1250 | Peachy | 5.2.1 | 2013 | 2022 | Yes | Yes | Yes | Yes | GitHub | Large framework, currently undergoing rewrite. Documentation currently non-existent, so contact User:Cyberpower678 for help. |
| Addshore | mediawiki-api-base | 7.4 | 2021 | 2022 | Yes | N/A | N/A | extra libs | GitHub | Base library for interaction with the MediaWiki API; provides ways to handle login, logout and tokens, as well as to easily get and post requests. |
| Addshore | mediawiki-api | 7.4 | 2021 | 2022 | Yes | No | some | extra libs | GitHub | Built on top of mediawiki-api-base; adds more advanced services for the API such as RevisionGetter, UserGetter, PageDeleter, RevisionPatroller, RevisionSaver, etc. Supports chunked uploading. |
| Nzhamstar, Xymph, Waldyrious | Wikimate | 5.3-5.6, 7.x, 8.x | 2023 | 2024 | Yes | No | No | No | GitHub | Supports core page and file operations: authentication, checking if pages exist, reading and editing pages/sections, getting file information, and downloading and uploading files. Aims to be easy to use. |
| Chris G, wbm1058 | botclasses.php | 8.2 | n/a | 2024 | Yes | Yes | Yes | No | on wiki | Fork of older wikibot.classes (used by ClueBot and SoxBot). Updated for 2010 and 2015 API changes. Supports file uploading. |

Python

Libraries:

- Pywikibot – Probably the most used bot framework.

- ceterach – An interface for interacting with MediaWiki

- wikitools – A Python-2 only lightweight bot framework that uses the MediaWiki API exclusively for getting data and editing, used and maintained by Mr.Z-man (downloads)

- mwclient – An API-based framework maintained by Bryan

- mwparserfromhell – A wikitext parser, maintained by The Earwig

- pymediawiki – A read-only MediaWiki API wrapper in Python, which is simple to use.

MATLAB

- MatWiki – a preliminary (as of Feb 2019) MATLAB R2016b (9.1.x) client supporting only bot logins and semantic #ask queries.

Microsoft .NET

Microsoft .NET is a software platform that supports a set of languages including C#, C++/CLI, Visual Basic .NET, J#, JScript .NET, IronPython, and Windows PowerShell. Using the Mono project, .NET programs can also run on Linux, Unix, BSD, Solaris and macOS as well as under Windows.

Libraries:

- DotNetWikiBot Framework – a full-featured client API on .NET that makes it easy to build programs and web robots that manage information on MediaWiki-powered sites. Now translated to several languages. Detailed compiled documentation is available in English.

- WikiFunctions .NET library – Bundled with AWB, this is a library of functions useful for bots, such as generating lists, loading/editing articles, connecting to the recent changes IRC channel and more.

- WikiClientLibrary – a portable and asynchronous MediaWiki API client library on .NET Standard (see on NuGet, docs).

Java

Libraries:

- Java Wiki Bot Framework – A Java wiki bot framework

- wiki-java – A Java wiki bot framework that is only one file

- WPCleaner – The library used by the WPCleaner tool

- jwiki – A simple and easy-to-use Java wiki bot framework

Node.js

Libraries:

- mwn – A library actively maintained and written in modern ES6 using promises (supporting async–await). This is a large library, and has classes for conveniently working with page titles and wikitext (including limited wikitext parsing capabilities). Also supports TypeScript. See mwn on GitHub.

- mock-mediawiki – An implementation of the MediaWiki JS interface in Node.js. See mock-mediawiki on GitHub.

- wikiapi – A simple way to access MediaWiki API via JavaScript with simple wikitext parser, using CeJS MediaWiki module. See Wikipedia bot examples on GitHub.

Ruby

Libraries:

- MediaWiki::Butt – API client. Actively maintained. See evaluation

- mediawiki/ruby/api – API client by Wikimedia Release Engineering Team. Last updated December 2017, no longer maintained, but still works.

- wikipedia-client – API client. Last updated March 2018. Unknown if still works.

- MediaWiki::Gateway – API client. Last updated January 2016. Tested up to MediaWiki 1.22, was then compatible with Wikimedia wikis. Unknown if still works.

Common Lisp

- CL-MediaWiki – implements MediaWiki API as a Common Lisp package. Is planned to use JSON as a query data format. Supports maxlag and assertion.

Haskell

VBScript

VBScript is a scripting language based on the Visual Basic programming language. There are no published bot frameworks for VBScript, but some examples of bots that use it can be seen below:

- User:Smallman12q/Scripts/cleanuplistingtowiki – Login and give preview of edit

- User:Smallman12q/VBS/Savewatchlist – Login, get raw watchlist, save to file, logout, close IE

- Commons:User:Smallbot#Sources – Several scripts showing the usage of VBScript (JavaScript, XMLHTTP, MSHTML, XMLDOM, COM) for batch uploads.

Lua

- During the Lua Annual Workshop 2016, Jim Carter and Dfavro started developing a Lua bot framework for Wikimedia projects. Please contact Jim Carter on their talk page to discuss.

- mwtest, created by User:Alexander Misel, is an example of a wiki bot written in Lua, with a simple API.